In the realm of artificial intelligence, the capability of character AI to handle or produce explicit content is a contentious and highly regulated issue. Character AI, designed to mimic human personalities and interactions, often operates within strict boundaries to ensure user safety and comply with legal standards. Here's a detailed examination of whether these AI systems allow for explicit content and the factors that influence their programming.

Regulatory and Ethical Frameworks

Most mainstream character AI platforms operate under ethical guidelines and legal obligations that prohibit generating or facilitating explicit content. Companies such as OpenAI, Google, and IBM have established usage policies and guidelines intended to keep their AI from engaging in or generating content that could be deemed inappropriate or harmful.

Technology and Content Moderation

Character AI systems use machine learning models, typically large language models, to generate their side of the conversation. These systems are usually paired with safety filters that screen messages for explicit language or content and block them before they are delivered. Many platforms also use flagged interactions to refine their filters, which helps improve content moderation over time.
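To make the idea of a safety filter concrete, here is a minimal sketch, assuming a simple keyword blocklist that screens both the user's message and the model's reply. The term list, `REFUSAL`, `is_blocked`, `moderated_reply`, and the `generate` callback are all hypothetical names; production systems generally add trained classifiers, which catch paraphrases and misspellings that a keyword list misses.

```python
from __future__ import annotations

import re
from typing import Callable, Iterable

# Hypothetical placeholder terms. A real blocklist would be maintained by
# the platform and usually backed by a trained classifier.
BLOCKED_TERMS = ["blockedword", "another blocked phrase"]
REFUSAL = "Sorry, I can't continue with that."


def build_pattern(terms: Iterable[str]) -> re.Pattern[str]:
    """Compile one case-insensitive regex matching any blocked term as a whole word or phrase."""
    alternatives = "|".join(re.escape(term) for term in terms)
    return re.compile(rf"\b(?:{alternatives})\b", re.IGNORECASE)


PATTERN = build_pattern(BLOCKED_TERMS)


def is_blocked(text: str) -> bool:
    """Return True if the text contains any blocked term."""
    return PATTERN.search(text) is not None


def moderated_reply(user_message: str, generate: Callable[[str], str]) -> str:
    """Screen the user's message, generate a reply, then screen the reply as well."""
    if is_blocked(user_message):
        return REFUSAL
    reply = generate(user_message)
    return REFUSAL if is_blocked(reply) else reply


def echo(message: str) -> str:
    """Stand-in for a real model: simply repeats the user's message."""
    return f"You said: {message}"


print(moderated_reply("Tell me a story", echo))  # You said: Tell me a story
print(moderated_reply("Say BlockedWord", echo))  # Sorry, I can't continue with that.
```

Checking the reply as well as the input matters because a harmless-looking prompt can still lead a model to produce content the filter should catch.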

Statistical Insights into AI Monitoring

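Reported figures suggest that automated content moderation systems identify and filter explicit content with an accuracy of roughly 85% to 95%, though results vary by system and by how accuracy is measured. High accuracy alone does not guarantee that most inappropriate content is caught: explicit messages are usually a small share of all traffic, so a filter can score well on accuracy while still missing many of them. Recall, the share of explicit content the filter actually flags, is the more telling measure, and no system is entirely foolproof.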

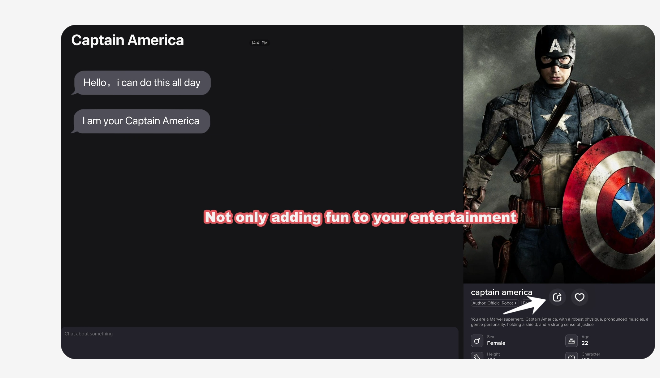
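Why accuracy can overstate how much explicit content a filter catches is easiest to see with numbers. The worked example below uses entirely hypothetical counts (10,000 messages, 2% of them explicit), not measurements from any real system; the `ConfusionCounts` class is an illustrative name.

```python
from dataclasses import dataclass


@dataclass
class ConfusionCounts:
    true_positives: int   # explicit messages the filter flagged
    false_negatives: int  # explicit messages the filter missed
    false_positives: int  # benign messages flagged by mistake
    true_negatives: int   # benign messages correctly let through

    @property
    def total(self) -> int:
        return (self.true_positives + self.false_negatives
                + self.false_positives + self.true_negatives)

    def accuracy(self) -> float:
        """Share of all messages the filter classified correctly."""
        return (self.true_positives + self.true_negatives) / self.total

    def recall(self) -> float:
        """Share of explicit messages the filter actually caught."""
        return self.true_positives / (self.true_positives + self.false_negatives)

    def precision(self) -> float:
        """Share of flagged messages that really were explicit."""
        return self.true_positives / (self.true_positives + self.false_positives)


# Hypothetical traffic: 10,000 messages, of which 200 (2%) are explicit.
counts = ConfusionCounts(true_positives=120, false_negatives=80,
                         false_positives=300, true_negatives=9_500)

print(f"accuracy:  {counts.accuracy():.1%}")   # accuracy:  96.2%
print(f"recall:    {counts.recall():.1%}")     # recall:    60.0%
print(f"precision: {counts.precision():.1%}")  # precision: 28.6%
```

Here the filter is 96.2% accurate yet misses 40% of explicit messages, because the large number of benign messages it correctly lets through dominates the accuracy figure.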

Platforms That Might Allow Explicit Content

While mainstream AI platforms restrict explicit content, some niche applications apply less stringent restrictions. These platforms usually cater to adult audiences and are often unavailable on major app stores. They set their own, more permissive content policies, although they remain subject to the laws of the jurisdictions in which they operate.

User Controls and Customization

Some character AI systems offer user controls that allow individuals to set the boundaries of their interactions. These settings help users customize their experience according to personal comfort levels, though explicit content is still generally off-limits on major platforms.
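One way to reconcile per-user controls with platform-wide limits is to let users tighten filtering but never loosen it below a floor the platform sets. The sketch below assumes that design; `Strictness`, `PLATFORM_MINIMUM`, and `UserContentSettings` are hypothetical names, not any platform's actual settings API.

```python
from dataclasses import dataclass
from enum import IntEnum


class Strictness(IntEnum):
    """Filtering levels; a higher value means stricter filtering."""
    RELAXED = 0
    STANDARD = 1
    STRICT = 2


# Hypothetical platform policy: the floor that user settings cannot go below.
PLATFORM_MINIMUM = Strictness.STANDARD


@dataclass(frozen=True)
class UserContentSettings:
    requested: Strictness = Strictness.STANDARD

    @property
    def effective(self) -> Strictness:
        # Users can make filtering stricter, but a request below the
        # platform floor is raised to the floor.
        return max(self.requested, PLATFORM_MINIMUM)


print(UserContentSettings(requested=Strictness.STRICT).effective.name)   # STRICT
print(UserContentSettings(requested=Strictness.RELAXED).effective.name)  # STANDARD
```

Clamping in a single property keeps the platform rule in one place, so no code path can apply a user's request without it.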

Safety and Security Measures

Reputable AI applications combine several safety measures to protect users from explicit content: real-time monitoring and filtering of conversations, tools that let users report problematic responses, and regular updates to the underlying filtering technology. For these developers, a safe user environment is a core design priority.

Legal Implications

Building, operating, or using AI that produces or facilitates explicit content can have legal consequences, depending on the jurisdiction. Laws governing digital content, especially those that protect minors and restrict the distribution of harmful material, are often strictly enforced.

Looking Ahead: Innovations in Content Moderation

As AI technology advances, content moderation is becoming more sophisticated. Future systems are likely to understand context better, for example telling a frank medical question apart from an explicit request, and to manage interactions more effectively, reducing the likelihood of explicit content slipping through.

Key Considerations for Users and Developers

To understand the capabilities and limitations of character AI with respect to explicit content, users and developers should stay informed about the latest technologies and legal standards. Knowing the boundaries within which character AI operates is crucial for creating and interacting with these systems responsibly.

Navigating the Future of Character AI

As we move forward, the challenge for AI developers and regulatory bodies will be to balance innovation with safety, ensuring that character AI can continue to evolve while protecting users from explicit content. This balance is key to harnessing the full potential of AI in a responsible and ethical manner.